By Andrew Maximow, Chief Drone Officer

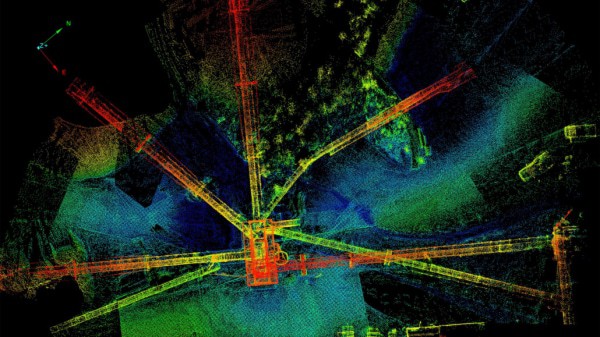

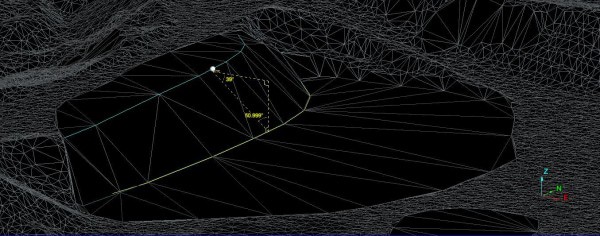

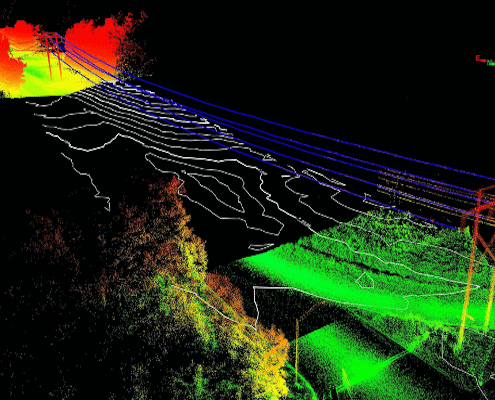

Mapping is one of the least understood applications for drones, particularly when it comes to deriving business insights that are quantitative rather than qualitative in nature. Let’s get some terms defined first. When I talk about quantitative deliverables, I mean deliverables that are based on measurements and analytics, which demand a high level of geospatial accuracy and precision. When I talk about mapping, I am referring to the technique of using photogrammetry to create 2D and 3D digital models from a series of overlapping photos captured by a drone flying over a large object, such as a building, or a predetermined area, such as a mining quarry.

By knowing the position and orientation of the camera, combined with other parameters and fancy geometry, software is able to process the individual photos into a larger map. This is commonly referred to as “stitching.” The stitched images are then processed into a 3D model called a point cloud, which consists of many individual points, each with x, y, z coordinates, that define the model in 3D space.

Maps are very useful for many industries, but if your customers require accurate measurements, additional controls are needed beyond simple photo processing.

By definition, photogrammetry is an approximation and is prone to cumulative errors, given the complexity and sheer number of components that need to work in harmony. For example, a minuscule error in camera focal length, combined with slight camera lens distortion, GPS errors, high wind, and shifts in drone and camera position, could add up to substantial errors in client deliverables. Reviewing the individual specifications and proving the impact of these variables on a client’s end result is beyond the scope of this piece. However, rest assured that Firmatek investigates these underlying issues, and the results inform our best-practice recommendations.
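To give a feel for how small errors accumulate, here is a minimal sketch that combines several error sources two ways: a worst-case straight sum, and a root-sum-square, which is a common simplification when the sources are assumed to be independent. The source names and the values in meters are hypothetical placeholders chosen for illustration, not measurements from any particular drone system.

```python
import math

def worst_case_error(errors_m):
    """Straight sum: every error source pushes in the same direction."""
    return sum(abs(e) for e in errors_m)

def root_sum_square_error(errors_m):
    """Root-sum-square: assumes the error sources are independent."""
    return math.sqrt(sum(e ** 2 for e in errors_m))

# Hypothetical per-source contributions in meters (illustrative only).
sources = {
    "focal_length": 0.02,
    "lens_distortion": 0.03,
    "gps_position": 0.05,
    "wind_and_platform_shift": 0.04,
}

if __name__ == "__main__":
    values = list(sources.values())
    print(f"worst case:      {worst_case_error(values):.3f} m")
    print(f"root-sum-square: {root_sum_square_error(values):.3f} m")
```

Either way, the combined figure is larger than any single contribution, which is why each component needs to be controlled rather than just the most obvious one.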

Instead of dwelling on the inadequacies of many drone systems, I’ll focus on what a drone mapping system needs in order to produce the accurate quantitative deliverables behind trusted insights. The following system- and component-level recommendations are minimum requirements for quality data collection.

1. Photo Geo-referencing & Ground Control

Photo geo-referencing refers to the accurate positioning of photos in 3D space. Most commercial off-the-shelf (COTS) drones have standard GPS units that are accurate to within +/- 10 meters, which is not good enough if you need results that are accurate to within several centimeters. Some newer COTS drones, such as the DJI P4-RTK, senseFly eBee, or FireFly6 PRO, use RTK (real-time kinematic) or PPK (post-processed kinematic) GPS correction systems to geo-reference data more accurately and to improve drone navigation, which is heavily dependent on GPS.

Prior to RTK and PPK integration, it was necessary to use ground control targets that had been accurately surveyed in and were identifiable in the imagery collected by the drone. Several targets were necessary around the perimeter and in the center of the area being mapped, because the photo processing software has a tendency to cause warping or scaling problems during the approximation, or “stitching,” process. Ground control helps correct this and ground-truth the results.
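One common way to ground-truth a model is to compare surveyed target coordinates against the coordinates of the same targets as they appear in the processed model, then summarize the differences as a root-mean-square error (RMSE). The sketch below assumes both sets of coordinates are in the same projected coordinate system and in meters; the function name and sample coordinates are hypothetical.

```python
import math

def rmse_3d(surveyed, modeled):
    """RMSE of 3D distances between surveyed and modeled target positions.

    Both arguments are sequences of (x, y, z) tuples in meters, listed in
    the same target order.
    """
    if not surveyed or len(surveyed) != len(modeled):
        raise ValueError("need matching, non-empty lists of targets")
    squared = [
        (sx - mx) ** 2 + (sy - my) ** 2 + (sz - mz) ** 2
        for (sx, sy, sz), (mx, my, mz) in zip(surveyed, modeled)
    ]
    return math.sqrt(sum(squared) / len(squared))

if __name__ == "__main__":
    # Hypothetical targets: surveyed positions vs. positions read from the model.
    surveyed = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
    modeled = [(0.03, 0.04, 0.0), (10.0, 0.0, 0.05)]
    print(f"check RMSE: {rmse_3d(surveyed, modeled):.3f} m")
```

For an honest check, the targets used to measure error should be ones that were not also used to correct the model.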

2. Exterior Orientation

Exterior orientation refers to the position of the camera in space and direction of view. This is determined by a variety of sensors providing input to the aircraft flight and navigational systems. These systems are complex, but essentially depend on the input from GPS, compass, accelerometers, and gyros to determine exterior orientation. RTK and PPK GPS corrections help ensure accurate position and direction of view.
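To illustrate why direction of view matters as much as position, the following sketch estimates how far a point on the ground shifts when the camera's pointing angle is off by a small amount. It assumes a camera looking straight down over flat terrain, which is a simplification; the function name and the example altitude and angle are hypothetical.

```python
import math

def ground_offset_m(altitude_m, angle_error_deg):
    """Ground shift caused by a pointing error, for a nadir camera over flat terrain."""
    return altitude_m * math.tan(math.radians(angle_error_deg))

if __name__ == "__main__":
    # Hypothetical flight: 100 m above ground with a 1 degree tilt error.
    offset = ground_offset_m(100.0, 1.0)
    print(f"ground offset: {offset:.2f} m")
```

Under these assumptions, a one-degree error at 100 meters moves the point by well over a meter, far outside a centimeter-level accuracy target.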

3. Internal Orientation and RGB Camera Qualifications

Internal orientation refers to internal camera specifications and parameters, such as focal length and lens distortion. This is one of the least understood topics, yet one of the most important, especially when the industry assumption is that any off-the-shelf digital camera is adequate. At Firmatek, we calibrate each and every RGB camera to determine its unique characteristics, which are then applied to every dataset within Pix4D or PhotoScan photo processing software. It’s not really a calibration per se, but a set of derived parameters designed to compensate for focal length and lens distortion variability between drone cameras. At a minimum, RGB cameras used for quantitative mapping applications should have the following features:

- Mechanical shutter

- 1” CMOS sensor is industry standard

- Ability to electronically capture Mid Exposure Pulse (i.e. the exact moment the shutter is triggered)

- Autofocus should be set to off

- Manual focus should be set to infinity

Many drone cameras have a linear rolling shutter (LRS), which exposes the sensor line by line rather than all at once. On a moving drone, this tends to distort and blur the imagery, producing less than desirable results. It worsens the photogrammetric approximation and adds to the cumulative error effect.
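A rough way to gauge the rolling shutter effect is to work out how far the drone travels while the sensor is still reading out a single frame, then express that distance in pixels on the ground. This sketch assumes straight, level flight at constant speed; the function names, the readout time, and the ground sampling distance (the ground distance covered by one pixel) are hypothetical values for illustration.

```python
def travel_during_readout_m(ground_speed_m_s, readout_time_s):
    """Distance the drone covers while one frame is being read out."""
    return ground_speed_m_s * readout_time_s

def displacement_px(ground_speed_m_s, readout_time_s, gsd_m_per_px):
    """The same distance, expressed in image pixels on the ground."""
    if gsd_m_per_px <= 0:
        raise ValueError("ground sampling distance must be positive")
    return travel_during_readout_m(ground_speed_m_s, readout_time_s) / gsd_m_per_px

if __name__ == "__main__":
    # Hypothetical values: 10 m/s flight, 30 ms readout, 3 cm per pixel.
    px = displacement_px(10.0, 0.030, 0.03)
    print(f"first and last sensor rows are offset by about {px:.0f} px")
```

A mechanical shutter that exposes the whole frame at once avoids this row-to-row offset, which is why it sits at the top of the list above.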

Debunking the Myth of Megapixels

Notice that I didn’t mention camera megapixels anywhere in this discussion. Their importance is a common myth in our industry. Many manufacturers tout megapixels as the most important parameter of their drone system camera. Megapixel count is more useful for still photos or video capture, but it is not the most important aspect of mapping.

It is also worth mentioning the limitations of photogrammetry. It is common knowledge that uniform surfaces (water, vegetation, sand, etc.) are problematic for photogrammetry. Crisp edges, vertical surfaces, overhangs, and details in towers and bridges are difficult to capture as well. Tilting RGB cameras at oblique angles helps to capture vertical surfaces found on buildings and other structures.

As drone-based photogrammetry becomes widely accepted, it is important to note best practices that help ensure quality, trusted insights for your clients.